History of the Cluster

Rationale and Requirements for a new cluster

Anunna, previously called 'The Agrogenomics Cluster', was originally conceived as the 7th pillar of the Breed4Food programme. While the other six pillars revolve around specific research themes, the Cluster represents a joint infrastructure. The rationale behind the cluster is to meet the increasing computational needs of genetics and genomics research by creating a joint facility that generates benefits of scale, thereby reducing cost. In addition, the joint infrastructure is intended to facilitate cross-organisational knowledge transfer. In that capacity, the HPC-Ag acts as a joint (virtual) laboratory where researchers, both academic and applied, can benefit from each other's know-how. Lastly, the joint cluster, housed on the Wageningen University campus, allows vital and often confidential data sources to be retained in a controlled environment, something that cloud services such as Amazon Cloud usually cannot guarantee.

Process of acquisition and financing

The Agrogenomics Cluster was financed by CATAgroFood. The IT-Workgroup formulated a set of requirements, which in the end were best met by an offer from Dell. ClusterVision was responsible for installing the cluster at the Theia server centre of FB-ICT.

Architecture of the cluster

Main Article: Architecture of the Agrogenomics HPC

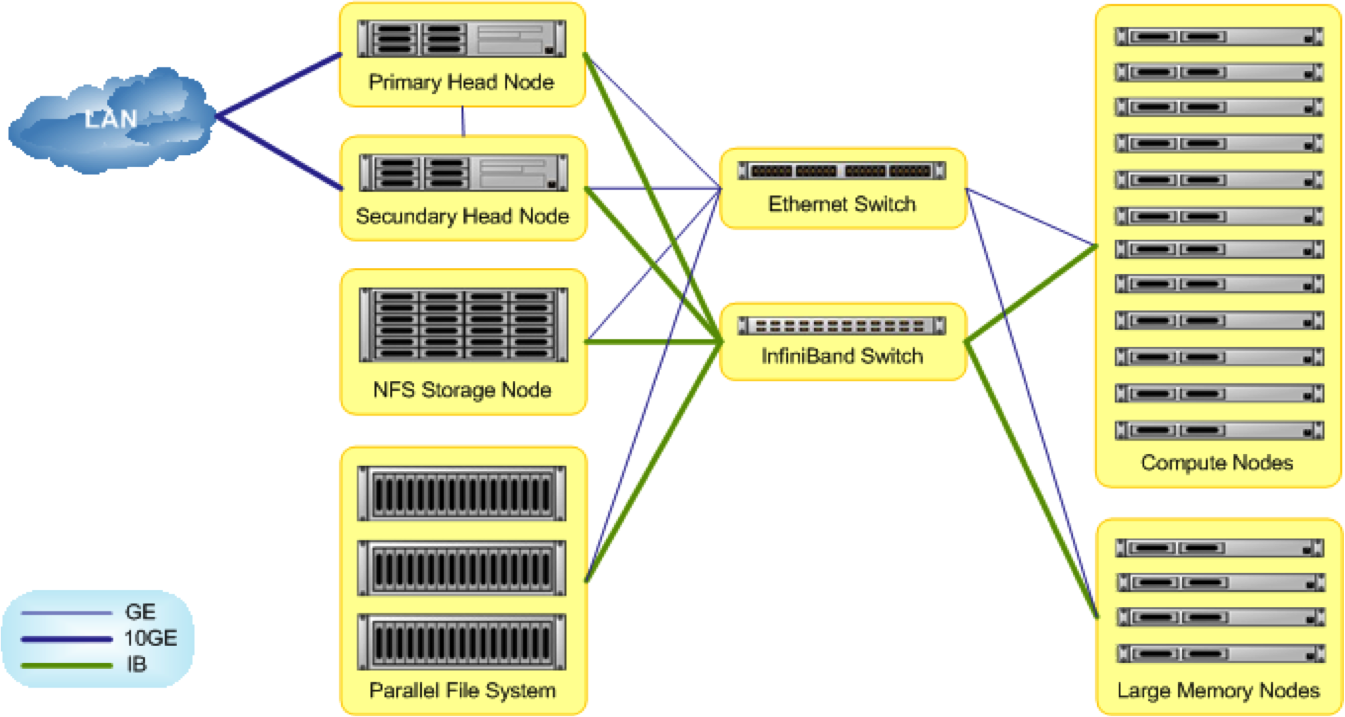

The new Agrogenomics HPC has a classic cluster architecture: a state-of-the-art Parallel File System (PFS), head nodes, and compute nodes (of varying 'size'), all connected by a high-speed InfiniBand network. The cluster will be implemented in stages. The initial stage includes a 600TB PFS, 48 slim nodes with 16 cores and 64GB RAM each, and 2 fat nodes with 64 cores and 1TB RAM each. The overall architecture, which includes two head nodes in a failover configuration and an InfiniBand network backbone, can easily be expanded by adding nodes and enlarging the PFS. The cluster management software is designed to facilitate a heterogeneous and evolving cluster.
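
As a rough illustration of what the initial stage adds up to, the node figures above can be tallied in a few lines of Python. This is only a sketch: the `NodeGroup` class and `totals` function are hypothetical names introduced here, and 1TB is assumed to mean 1024GB. The head nodes and the PFS are not counted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One class of compute node in the cluster (hypothetical helper type)."""
    name: str
    count: int
    cores_per_node: int
    ram_gb_per_node: int


# Node counts and sizes as stated for the initial stage.
# Assumption: 1TB of RAM per fat node is taken as 1024GB.
INITIAL_STAGE = [
    NodeGroup("slim", count=48, cores_per_node=16, ram_gb_per_node=64),
    NodeGroup("fat", count=2, cores_per_node=64, ram_gb_per_node=1024),
]


def totals(groups):
    """Return (total cores, total RAM in GB) summed over all node groups."""
    cores = sum(g.count * g.cores_per_node for g in groups)
    ram_gb = sum(g.count * g.ram_gb_per_node for g in groups)
    return cores, ram_gb


cores, ram_gb = totals(INITIAL_STAGE)
print(f"compute cores: {cores}")   # 48*16 + 2*64 = 896
print(f"total RAM: {ram_gb} GB")   # 48*64 + 2*1024 = 5120
```

Because the cluster is meant to grow in stages, a later expansion could be modelled by appending further `NodeGroup` entries to the list and calling `totals` again.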