Anunna: Difference between revisions

| Line 54: | Line 54: | ||

The B4F Cluster is housed at the main server centre of WUR-FB-ICT, near Wageningen Campus. The building (Theia) may not look like much from the outside (used to function as potato storage) but inside is a modern server centre that includes emergency power backup systems and automated fire extinguishers. Many of the server facilities provided by FB-ICT that are used on a daily basis by WUR personnel and students are located there, as is the B4F Cluster. | The B4F Cluster is housed at the main server centre of WUR-FB-ICT, near Wageningen Campus. The building (Theia) may not look like much from the outside (used to function as potato storage) but inside is a modern server centre that includes emergency power backup systems and automated fire extinguishers. Many of the server facilities provided by FB-ICT that are used on a daily basis by WUR personnel and students are located there, as is the B4F Cluster. | ||

{{-}} | {{-}} | ||

[[File:Cluster2_pic.png|thumb|left|200px]] | [[File:Cluster2_pic.png|thumb|left|200px|Some components of the cluster after unpacking.]] | ||

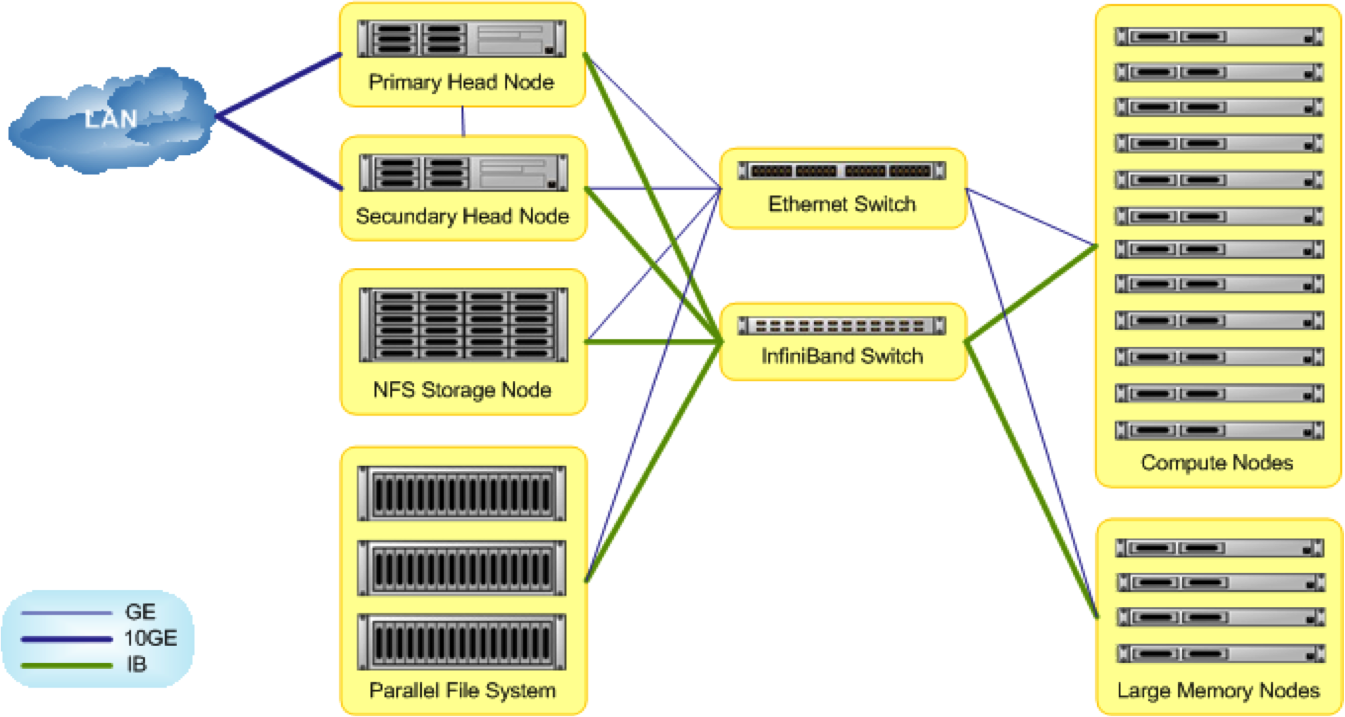

The final configuration after installation. | The final configuration after installation. | ||

[[File:Cluster_pic.png|thumb|right|400px]] | [[File:Cluster_pic.png|thumb|right|400px]] | ||

Revision as of 21:13, 23 November 2013

The Breed4Food (B4F) cluster is a joint High Performance Compute (HPC) infrastructure of the Animal Breeding and Genomics Centre (WU-Animal Breeding and Genomics and Wageningen Livestock Research) and four major breeding companies: Cobb-Vantress, CRV, Hendrix Genetics, and TOPIGS.

Rationale and Requirements for a new cluster

The B4F Cluster is, in a way, the 7th pillar of the Breed4Food programme. While the other six pillars revolve around specific research themes, the Cluster represents a joint infrastructure. The rationale behind the cluster is to meet the increasing computational needs in the field of genetics and genomics research by creating a joint facility that generates economies of scale, thereby reducing cost. In addition, the joint infrastructure is intended to generate cross-organisational knowledge transfer. In that capacity, the B4F Cluster acts as a joint (virtual) laboratory where researchers across the aisle (academic and applied) can benefit from each other's know-how. Lastly, the joint cluster, housed at Wageningen University campus, allows vital and often confidential data sources to be retained in a controlled environment, something that cloud services such as Amazon Cloud usually cannot guarantee.

Process of acquisition and financing

The B4F cluster was financed by CAT-AgroFood. The IT Workgroup formulated a set of requirements that in the end were best met by an offer from Dell. ClusterVision was responsible for installing the cluster at the Theia server centre of FB-ICT.

Architecture of the cluster

The new B4F HPC has a classic cluster architecture: a state-of-the-art Parallel File System (PFS), head nodes, and compute nodes (of varying 'size'), all connected by a very fast network interconnect (InfiniBand). The cluster will be implemented in stages. The initial stage includes a 600TB PFS, 48 slim nodes of 16 cores and 64GB RAM each, and 2 fat nodes of 64 cores and 1TB RAM each. The overall architecture, which includes two head nodes in fail-over configuration and an InfiniBand network backbone, can easily be expanded by adding nodes and expanding the PFS. The cluster management software is designed to facilitate a heterogeneous and evolving cluster.

Nodes

The cluster consists of a number of separate machines, each with its own operating system. The default operating system throughout the cluster is RHEL6. The cluster has two master nodes in a redundant configuration: if one crashes, the other takes over seamlessly. Various other nodes support the two main file systems (the Lustre parallel file system and the NFS file system). The actual computations are done on the worker nodes. The cluster is configured heterogeneously: it consists of 48 so-called 'slim nodes', each with 16 cores and 64GB of RAM (named 'node001' through 'node060'; not all of these names refer to an installed node), and two so-called 'fat nodes', each with 64 cores and 1TB of RAM ('fat001' and 'fat002').
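As a quick sanity check on the figures above, the aggregate worker capacity can be worked out from the per-node numbers. The sketch below is only illustrative: the class and function names are made up for this example, and 1TB is assumed to mean 1024GB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeClass:
    """One class of worker node, described by the figures quoted in the text."""
    name: str
    count: int
    cores_per_node: int
    ram_gb_per_node: int


# Figures from the text above; 1 TB is treated as 1024 GB (an assumption).
NODE_CLASSES = [
    NodeClass("slim", count=48, cores_per_node=16, ram_gb_per_node=64),
    NodeClass("fat", count=2, cores_per_node=64, ram_gb_per_node=1024),
]


def total_cores(classes):
    """Sum the cores over all worker node classes."""
    return sum(c.count * c.cores_per_node for c in classes)


def total_ram_gb(classes):
    """Sum the nominal RAM (in GB) over all worker node classes."""
    return sum(c.count * c.ram_gb_per_node for c in classes)


if __name__ == "__main__":
    print("worker cores:", total_cores(NODE_CLASSES))
    print("worker RAM (GB):", total_ram_gb(NODE_CLASSES))
```

Under these assumptions the initial stage offers 896 worker cores and 5120GB of nominal RAM. The memory actually available to jobs will be somewhat lower, since the operating system on each node also needs some.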

Information from the Cluster Management Portal, as it appeared on November 23, 2013:

DEVICE INFORMATION

| Hostname | State | Memory | Cores | CPU | Speed | GPU | NICs | IB | Category |
|---|---|---|---|---|---|---|---|---|---|
| master1, master2 | UP | 67.6 GiB | 16 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2199 MHz | | 5 | 1 | |
| node001..node042, node049..node054 | UP | 67.6 GiB | 16 | Intel(R) Xeon(R) CPU E5-2660 0+ | 1200 MHz | | 3 | 1 | default |
| node043..node048, node055..node060 | DOWN | N/A | N/A | N/A | N/A | N/A | N/A | N/A | default |
| mds01, mds02 | UP | 16.8 GiB | 8 | Intel(R) Xeon(R) CPU E5-2609 0+ | 2400 MHz | | 5 | 1 | mds |
| storage01 | UP | 67.6 GiB | 32 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2200 MHz | | 5 | 1 | oss |
| storage02..storage06 | UP | 67.6 GiB | 32 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2199 MHz | | 5 | 1 | oss |
| nfs01 | UP | 67.6 GiB | 8 | Intel(R) Xeon(R) CPU E5-2609 0+ | 2400 MHz | | 7 | 1 | login |
| fat001, fat002 | UP | 1.0 TiB | 64 | AMD Opteron(tm) Processor 6376 | 2299 MHz | | 5 | 1 | fat |
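The portal listing abbreviates runs of hosts as, for example, `node001..node042`. A minimal sketch of how such a specification could be expanded into individual host names is given below. The function `expand_hosts` is a hypothetical helper written for this page, not part of the cluster management software, and it assumes that both ends of a range share the same prefix and zero-padding.

```python
import re

# Matches "prefixNNN..prefixMMM", e.g. "node001..node042".
_RANGE = re.compile(r"^([A-Za-z]+)(\d+)\.\.([A-Za-z]+)(\d+)$")


def expand_hosts(spec):
    """Expand a comma-separated host specification into a list of host names."""
    hosts = []
    for part in (p.strip() for p in spec.split(",")):
        if not part:
            continue
        match = _RANGE.match(part)
        if match is None:
            # A single host name such as "master1".
            hosts.append(part)
            continue
        prefix, start, end_prefix, end = match.groups()
        if prefix != end_prefix:
            raise ValueError(f"mismatched prefixes in range: {part!r}")
        width = len(start)  # keep the zero-padding of the first host name
        hosts.extend(
            f"{prefix}{i:0{width}d}" for i in range(int(start), int(end) + 1)
        )
    return hosts


if __name__ == "__main__":
    up = expand_hosts("node001..node042, node049..node054")
    down = expand_hosts("node043..node048, node055..node060")
    print(len(up), "slim nodes up;", len(down), "node names not in use")
```

Applied to the listing above, the two `UP` ranges expand to 48 host names, which matches the 48 slim nodes of the initial stage, while the 12 names marked `DOWN` are the unused ones.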

Filesystems

The B4F Cluster has two primary file systems, each with different properties and purposes.

Parallel File System: Lustre

At the base of the cluster is an ultrafast file system, a so-called Parallel File System (PFS). The current size of the PFS is around 600TB. The PFS implemented in the B4F Cluster is called Lustre. Lustre has become very popular in recent years because it is feature-rich, considered very stable, and Open Source. Lustre is nowadays the default PFS option in Dell clusters as well as in clusters sold by other vendors. The PFS is mounted on all head nodes and worker nodes of the cluster, providing seamless integration between compute and data infrastructure. The strength of a PFS is speed: by design, the total I/O should be up to 15GB/s. With a very large number of compute nodes and very high volumes of data, the high read and write speeds that the PFS can provide are necessary. The Lustre file system is divided into several partitions, each differing in persistence and backup features. The Lustre PFS is meant to store (shared) data that is likely to be used for analysis in the near future. Personal analysis scripts, software, or additional small data files can be stored in the $HOME directory of each user.
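To put the design throughput into perspective, the following back-of-the-envelope sketch estimates how long it would take to stream a given volume of data at a given aggregate rate. It assumes decimal units (1TB = 1000GB) and a sustained rate equal to the 15GB/s design maximum, so the result is a lower bound on the time rather than a measured figure.

```python
def transfer_seconds(size_tb, rate_gb_per_s):
    """Time in seconds to move size_tb terabytes at rate_gb_per_s gigabytes/second.

    Assumes decimal units (1 TB = 1000 GB) and a perfectly sustained rate.
    """
    if rate_gb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_tb * 1000 / rate_gb_per_s


if __name__ == "__main__":
    seconds = transfer_seconds(600, 15)  # the whole PFS at the design maximum
    print(f"{seconds:.0f} s, or about {seconds / 3600:.1f} hours")
```

Even at the design maximum, reading the full 600TB once would take 40000 seconds, roughly 11 hours.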

Network File System (NFS): $HOME dirs

Each user will have his/her own home directory. The path of the home directory will be:

/home/[name partner]/[username]

/home lives on a so-called Network File System, or NFS. The NFS is separate from the PFS and is far more limited in I/O (read/write speeds, latency, etc.) than the PFS. This means that it is not meant to store large data volumes that require high data transfer rates or low latency. Compared to the Lustre PFS (600TB), the NFS is small: only 20TB. The /home partition will be backed up daily.
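The home directory layout described above can be expressed as a small helper. This is only a sketch: the function name and the example partner and user names are hypothetical, and the actual partner directory names on the cluster are not specified here.

```python
import posixpath


def home_dir(partner, username):
    """Build /home/[name partner]/[username] from its two components."""
    for label, value in (("partner", partner), ("username", username)):
        # Reject empty values and anything that would change the directory depth.
        if not value or "/" in value or value in (".", ".."):
            raise ValueError(f"invalid {label}: {value!r}")
    return posixpath.join("/home", partner, username)


if __name__ == "__main__":
    # "examplepartner" and "jdoe" are placeholders, not real account names.
    print(home_dir("examplepartner", "jdoe"))
```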

Network

The various components - head nodes, worker nodes, and most importantly, the Lustre PFS - are all interconnected by an ultra-high-speed network called InfiniBand.

Housing at Theia

The B4F Cluster is housed at the main server centre of WUR-FB-ICT, near Wageningen Campus. The building (Theia) may not look like much from the outside (it used to function as a potato storage facility), but inside it is a modern server centre that includes emergency power backup systems and automated fire extinguishers. Many of the server facilities provided by FB-ICT that are used on a daily basis by WUR personnel and students are located there, as is the B4F Cluster.

The final configuration after installation.

Management

Project Leader

- Stephen Janssen (WUR-FB-ICT)

Daily Project Management

- Koen Pollmann (WUR-FB-ICT)

- Andre ten Böhmer (WUR-FB-ICT)

Steering Group

The Steering Group ensures that the HPC generates enough revenue and meets the needs of the users. This includes setting fees, developing contracts, attracting new users, deciding on investments in the HPC, and communication.

- Frido Hamoen (CRV, on behalf of Breed4Food industrial partners, replaced Alfred de Vries in August)

- Petra Caessens (CAT-AgroFood)

- Wojtek Sablik (WUR-FB-ICT)

- Edda Neuteboom (CAT-AgroFood, secretariat)

- Johan van Arendonk (Wageningen UR, chair).

IT Workgroup

The IT Workgroup is responsible for the technical performance of the HPC. It has been involved in the design of the HPC and the selection of the supplier. Its members will support the technical management of the HPC and share experiences to ensure that the HPC meets the needs of its users. The IT Workgroup will advise the Steering Group on investments in software and hardware.

- Stephen Janssen (WUR-FB-ICT)

- Koen Pollmann (WUR-FB-ICT)

- Andre ten Böhmer (WUR-FB-ICT)

- Wes Barris (Cobb)

- Ton Dings (Hendrix Genetics)

- Henk van Dongen (Topigs)

- Frido Hamoen (CRV)

- Mario Calus (ABGC-WLR)

- Hendrik-Jan Megens (ABGC-ABG)

User Group

The User Group is ultimately the most important of all groups, because it encompasses the users for whom the infrastructure was built. In addition, successful use of the cluster will rely on an active community of users that is willing to share knowledge and best practices, including maintenance and expansion of this Wiki. Regular User Group meetings will be held in the future [frequency to be determined] to facilitate this process.

Access Policy

The access policy is still a work in progress. In principle, all staff and students of the five main partners will have access to the cluster. Access needs to be granted actively (by FB-ICT creating an account on the cluster). Use of resources is limited by the scheduler. A user's priority on the system's resources is regulated by the queues ('partitions') to which that user has been granted access.

Contact Persons

A request to access the cluster needs to be directed to one of the following persons (please refer to appropriate partner):

Cobb-Vantress

- Wes Barris

- Jun Chen

ABGC

Animal Breeding and Genetics

Wageningen Livestock Research

- Mario Calus

- Ina Hulsegge

CRV

- Frido Hamoen

- Chris Schrooten

Hendrix Genetics

- Ton Dings

- Abe Huisman

- Addie Vereijken

Topigs

- Henk van Dongen

- Egiel Hanenbarg

- Naomi Duijvensteijn

Cluster Management Software and Scheduler

The B4F cluster uses Bright Cluster Manager software for overall cluster management, and Slurm as job scheduler.
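To illustrate what submitting work through Slurm typically involves, the sketch below renders a minimal batch script as text. The `#SBATCH` options used are standard Slurm options, but the helper `render_sbatch`, the partition name, and the resource values are placeholders chosen for this example; the partitions actually available depend on what has been granted to a user.

```python
def render_sbatch(job_name, partition, cpus, mem_gb, time_limit, command):
    """Return the text of a single-node Slurm batch script.

    time_limit uses Slurm's HH:MM:SS notation; mem_gb is memory per node in GB.
    """
    if cpus < 1 or mem_gb < 1:
        raise ValueError("cpus and mem_gb must be positive")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",
        "#SBATCH --nodes=1",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "example_partition" is a placeholder, not a real partition on this cluster.
    print(render_sbatch("demo", "example_partition", 4, 16, "01:00:00", "echo hello"))
```

The resulting text could be saved to a file and handed to Slurm's `sbatch` command. A request of 4 cores and 16GB, as in the example, would fit within a single slim node (16 cores, 64GB).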