Architecture of the HPC

Latest revision as of 15:50, 15 June 2023

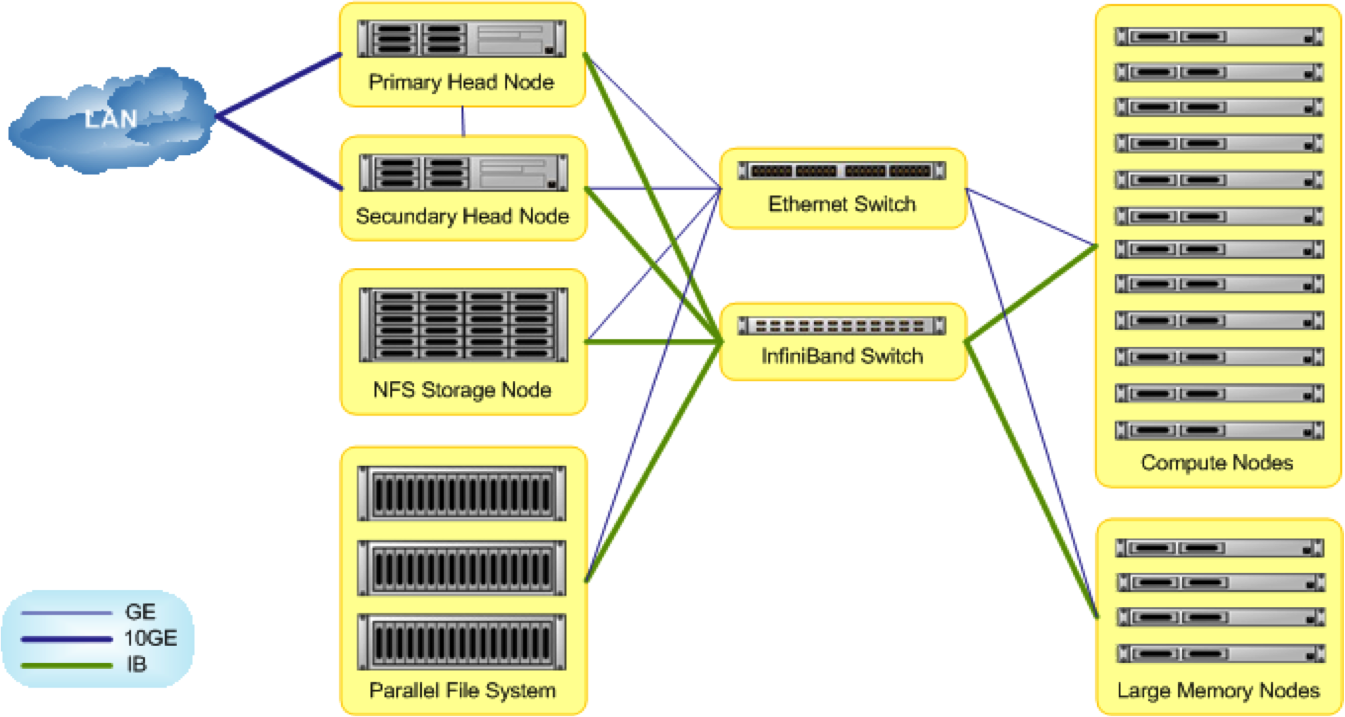

The new Agrogenomics HPC has a classic cluster architecture: a state-of-the-art Parallel File System (PFS), head nodes, and compute nodes (of varying 'size'), all connected by very fast InfiniBand network connections. The cluster will be implemented in stages. The initial stage includes a 600TB PFS, 48 slim nodes with 16 cores and 64GB RAM each, and 2 fat nodes with 64 cores and 1TB RAM each. The overall architecture, which includes two head nodes in a failover configuration and an InfiniBand network backbone, can easily be expanded by adding nodes and enlarging the PFS. The cluster management software is designed to facilitate a heterogeneous and evolving cluster.

Nodes

The cluster consists of a number of separate machines, each running its own operating system. The default operating system throughout the cluster is Scientific Linux version 6. Scientific Linux (SL) is based on Red Hat Enterprise Linux (RHEL), which currently is at version 6, and SL follows the RHEL versioning scheme.

The cluster has two master nodes in a redundant configuration: if one crashes, the other takes over seamlessly. Various other nodes exist to support the two main file systems (the Lustre parallel file system and the NFS file system). The actual computations are done on the worker nodes, also called compute nodes. The cluster is configured in a heterogeneous fashion: it consists of 48 so-called 'slim nodes', each with 16 cores and 64GB of RAM (named 'node001' through 'node060'; note that not all node names map to physical nodes), and two so-called 'fat nodes', each with 64 cores and 1TB of RAM ('fat001' and 'fat002').
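The node counts above imply an aggregate compute capacity that is easy to tally. The following is a minimal sketch, not an official inventory; the `NodeType` class and its field names are illustrative, and 1TB is assumed to mean 1024GB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    name: str
    count: int
    cores: int
    ram_gb: int


# Figures taken from the paragraph above; 1TB is treated as 1024GB (assumption).
NODE_TYPES = [
    NodeType("slim", count=48, cores=16, ram_gb=64),
    NodeType("fat", count=2, cores=64, ram_gb=1024),
]


def totals(node_types):
    """Return (nodes, cores, ram_gb) summed over all node types."""
    nodes = sum(t.count for t in node_types)
    cores = sum(t.count * t.cores for t in node_types)
    ram_gb = sum(t.count * t.ram_gb for t in node_types)
    return nodes, cores, ram_gb


if __name__ == "__main__":
    nodes, cores, ram_gb = totals(NODE_TYPES)
    print(f"{nodes} compute nodes, {cores} cores, {ram_gb} GB RAM")
```

Under these assumptions the initial stage adds up to 50 compute nodes, 896 cores, and 5120GB of RAM.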

Information from the Cluster Management Portal, as it appeared on June 26, 2014:

DEVICE INFORMATION

| Hostname | State | Memory | Cores | CPU | Speed | GPU | NICs | IB | Category |
|---|---|---|---|---|---|---|---|---|---|
| node001..node002 | UP | 67.6 GiB | 16 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2200 MHz | | 3 | 1 | default |
| node049..node054 | UP | 67.6 GiB | 16 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2200 MHz | | 3 | 1 | default |
| master1 master2 | UP | 67.5 GiB | 16 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2199 MHz | | 5 | 1 | custom |
| mds01, mds02 | UP | 16.8 GiB | 8 | Intel(R) Xeon(R) CPU E5-2609 0+ | 2399 MHz | | 5 | 1 | mds |
| storage01..storage06 | UP | 67.6 GiB | 32 | Intel(R) Xeon(R) CPU E5-2660 0+ | 2200 MHz | | 5 | 1 | oss |
| nfs01 | UP | 67.6 GiB | 8 | Intel(R) Xeon(R) CPU E5-2609 0+ | 2399 MHz | | 7 | 1 | login |
| fat001 fat002 | UP | 1.0 TiB | 64 | AMD Opteron(tm) Processor 6376 | 2300 MHz | | 5 | 1 | fat |
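The listing abbreviates consecutive hosts as ranges such as `storage01..storage06`. As a rough sketch, such a range could be expanded into individual hostnames as follows. The function name `expand_hosts` is hypothetical, and the code assumes that `..` denotes an inclusive range with a shared lowercase prefix and zero-padded numbering, which the portal output suggests but the source does not state.

```python
import re


def expand_hosts(spec):
    """Expand 'node001..node003' into ['node001', 'node002', 'node003'].

    A spec without '..' is returned as a single-element list.
    """
    if ".." not in spec:
        return [spec]
    first, last = spec.split("..", 1)
    m_first = re.fullmatch(r"([a-z]+)(\d+)", first)
    m_last = re.fullmatch(r"([a-z]+)(\d+)", last)
    if not m_first or not m_last or m_first.group(1) != m_last.group(1):
        raise ValueError(f"unsupported host range: {spec!r}")
    prefix = m_first.group(1)
    width = len(m_first.group(2))  # keep the zero padding of the first name
    start, stop = int(m_first.group(2)), int(m_last.group(2))
    return [f"{prefix}{n:0{width}d}" for n in range(start, stop + 1)]
```

For example, `expand_hosts("storage01..storage06")` yields the six storage server names, while a single name such as `nfs01` is passed through unchanged.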

Main cluster node configuration:

- Master nodes: 2 PowerEdge R720 master nodes in a failover configuration. They also share some applications and databases with the other machines in the cluster, for cases where the parallel file system is not the ideal solution.

- NFS server: 1 PowerEdge R720XD. The NFS node also acts as the login node, where users log in, compile applications, and submit jobs. It shares the home directories via NFS.

- 50 compute nodes:

  - 12x Dell PowerEdge C6000 enclosures, each containing four nodes

  - 48x Dell PowerEdge C6220; 16 Intel Xeon cores, 64GB RAM each

  - 2x Dell R815; 64 AMD Opteron cores, 1TB RAM each

Hyperthreading is disabled in compute nodes.

Filesystems

The Agrogenomics Cluster has two primary file systems, each with different properties and purposes.

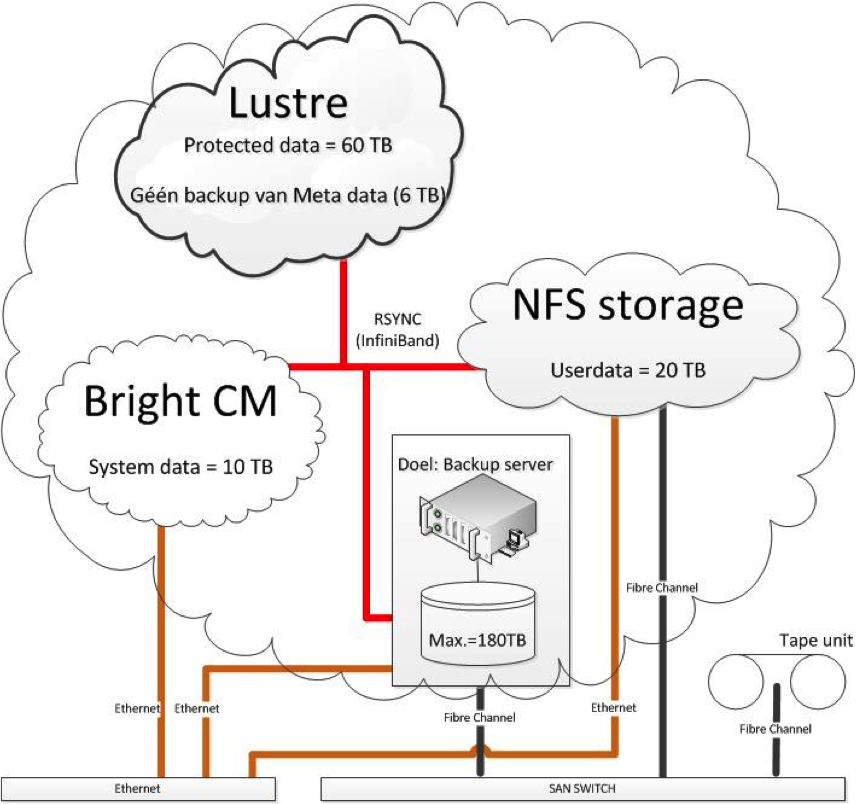

Parallel File System: Lustre

At the base of the cluster is an ultrafast file system, a so-called Parallel File System (PFS). The current size of the PFS is around 600TB. The PFS implemented in the Agrogenomics Cluster is called Lustre. Lustre has become very popular in recent years because it is feature-rich, is considered very stable, and is open source. Lustre is nowadays the default PFS option in Dell clusters as well as in clusters sold by other vendors. The PFS is mounted on all head nodes and worker nodes of the cluster, providing seamless integration between compute and data infrastructure. The strength of a PFS is speed: by design, the total I/O should be up to 15GB/s. With a very large number of compute nodes and very high volumes of data, the high read/write speeds that the PFS provides are necessary. The Lustre file system is divided into several partitions, each differing in persistence and backup features. The Lustre PFS is meant to store (shared) data that is likely to be used for analysis in the near future. Personal analysis scripts, software, or additional small data files can be stored in each user's $HOME directory.
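To put the 15GB/s design figure in perspective, the sketch below works out two idealised numbers: how long a full read of the 600TB file system would take at peak rate, and what each of the 50 compute nodes would get if the bandwidth were split evenly. These are back-of-the-envelope upper bounds using decimal units, not measurements; the function names are illustrative.

```python
def transfer_seconds(size_tb, bandwidth_gb_per_s):
    """Seconds needed to move size_tb terabytes at the given rate (decimal units)."""
    return size_tb * 1000 / bandwidth_gb_per_s


def per_node_share(bandwidth_gb_per_s, nodes):
    """Even split of the aggregate bandwidth across nodes reading at once."""
    return bandwidth_gb_per_s / nodes


PFS_SIZE_TB = 600      # size quoted above
PFS_PEAK_GB_S = 15     # design peak quoted above
COMPUTE_NODES = 50     # 48 slim + 2 fat nodes

full_scan_hours = transfer_seconds(PFS_SIZE_TB, PFS_PEAK_GB_S) / 3600
share = per_node_share(PFS_PEAK_GB_S, COMPUTE_NODES)
print(f"Full scan at peak rate: {full_scan_hours:.1f} h")
print(f"Even share per node: {share:.2f} GB/s")
```

Real workloads will see lower rates, since the peak assumes ideal access patterns and no contention.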

The hardware components of the PFS:

- 2x Dell PowerEdge R720

- 1x Dell PowerVault MD3220

- 6x Dell PowerEdge R620

- 6x Dell PowerVault MD3260

Network File System (NFS): $HOME dirs

Each user will have their own home directory. The path of the home directory will be:

/home/[name partner]/[username]
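As a small illustration of the path scheme, the sketch below assembles a home directory path from a partner name and a username. The helper `home_dir` and the example values are hypothetical; the actual partner names used on the cluster are not listed here.

```python
from pathlib import PurePosixPath


def home_dir(partner, username):
    """Build /home/<partner>/<username>, rejecting empty or nested components."""
    for part in (partner, username):
        if not part or "/" in part or part in (".", ".."):
            raise ValueError(f"invalid path component: {part!r}")
    return PurePosixPath("/home") / partner / username


# Hypothetical partner and user names, for illustration only.
print(home_dir("examplepartner", "jdoe"))
```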

/home lives on a so-called Network File System, or NFS. The NFS is separate from the PFS and is far more limited in I/O (read/write speeds, latency, etc.) than the PFS. This means that it is not meant to store large data volumes that require high data transfer rates or low latency. The NFS is also small compared to the Lustre PFS: only 20TB, against 600TB. The /home partition will be backed up daily. The amount of space that can be allocated is limited per user (200GB soft and 210GB hard limit). Personal quota and total use per user can be found using:

quota -s

The NFS is served by the NFS server (nfs01), which also serves as the access point to the cluster.
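The 200GB soft and 210GB hard limits can be read as three usage bands. The sketch below only compares a usage figure against those two numbers; it does not call or parse `quota`, and how the quota system itself enforces the limits (for example, any grace period) is not described in this page. The function name and the status strings are illustrative.

```python
SOFT_LIMIT_GB = 200  # soft limit quoted above
HARD_LIMIT_GB = 210  # hard limit quoted above


def quota_status(used_gb, soft=SOFT_LIMIT_GB, hard=HARD_LIMIT_GB):
    """Classify usage in GB against the soft and hard limits."""
    if used_gb < 0:
        raise ValueError("usage cannot be negative")
    if used_gb >= hard:
        return "hard limit reached"
    if used_gb > soft:
        return "over soft limit"
    return "ok"
```

A user at 205GB would fall in the middle band, above the soft limit but still below the hard limit.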

Hardware components of the NFS:

- 1x Dell PowerEdge R720XD

- 1x Dell PowerVault MD3220

Network

The various components (head nodes, worker nodes, and most importantly, the Lustre PFS) are all interconnected by an ultra-high-speed network called InfiniBand. A total of 7 InfiniBand switches are configured in a fat-tree topology.